[SD][ML] ControlNet in StableDiffusion: A Comprehensive Guide

Overview

ControlNet is an extension to Stable Diffusion that gives the model explicit control over the content and structure of the images it generates. This guide covers the fundamentals of ControlNet, how it works, and how to use it in code; the references at the end point to material on training your own ControlNet.

source: https://arxiv.org/abs/2302.05543


What is ControlNet?

ControlNet is a neural network structure that adds conditional control to Stable Diffusion models.

It allows users to influence the generation process of the diffusion model by injecting additional information, such as edge maps, poses, or depth maps, into the model. This ensures that the generated images adhere more closely to the desired specifications, leading to more accurate and contextually relevant outputs.

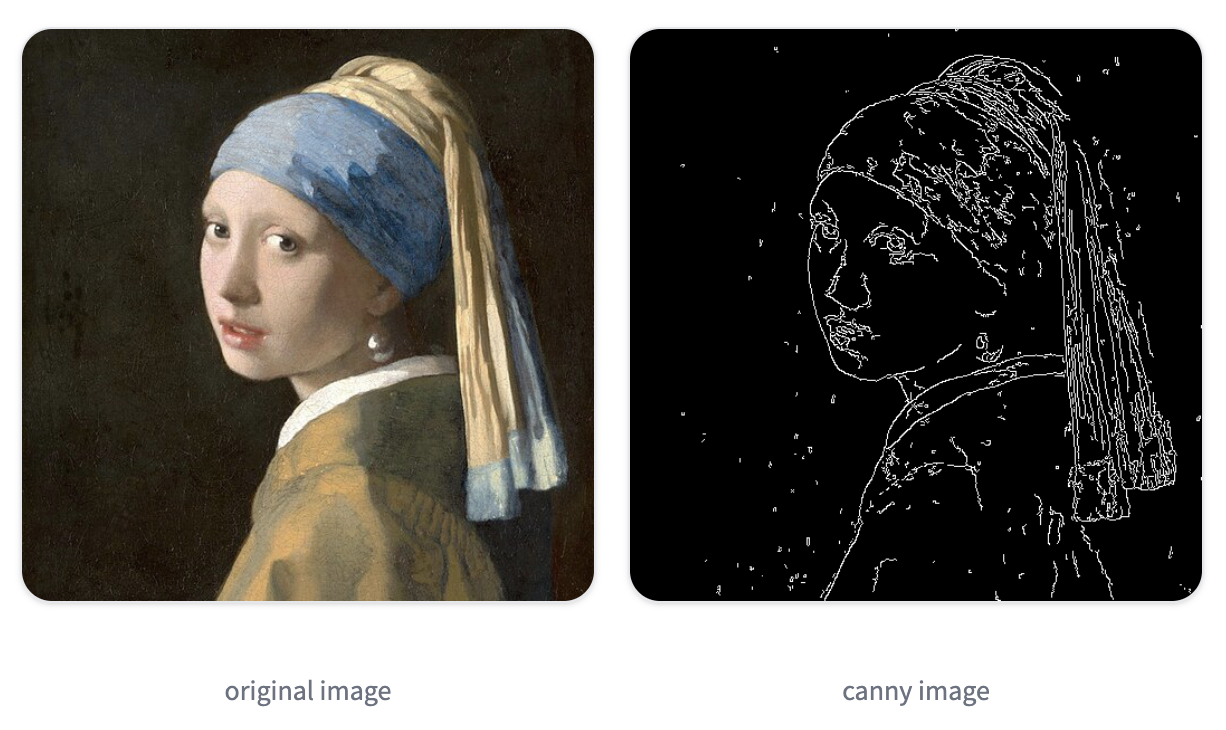

Use OpenCV to extract the Canny edges from an image:

source: https://huggingface.co/docs/diffusers/using-diffusers/controlnet

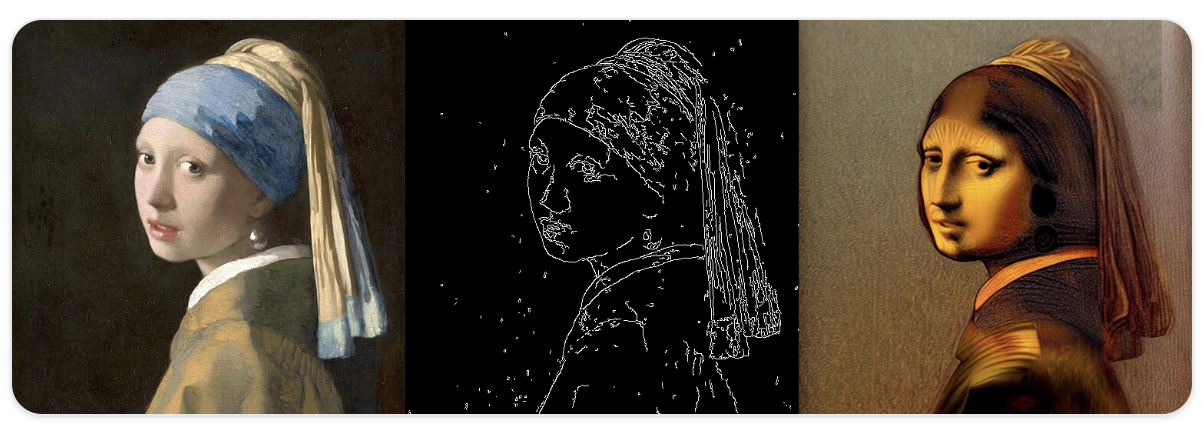

Use the Canny edge map as the condition input and let ControlNet generate a new image:

source: https://huggingface.co/docs/diffusers/using-diffusers/controlnet

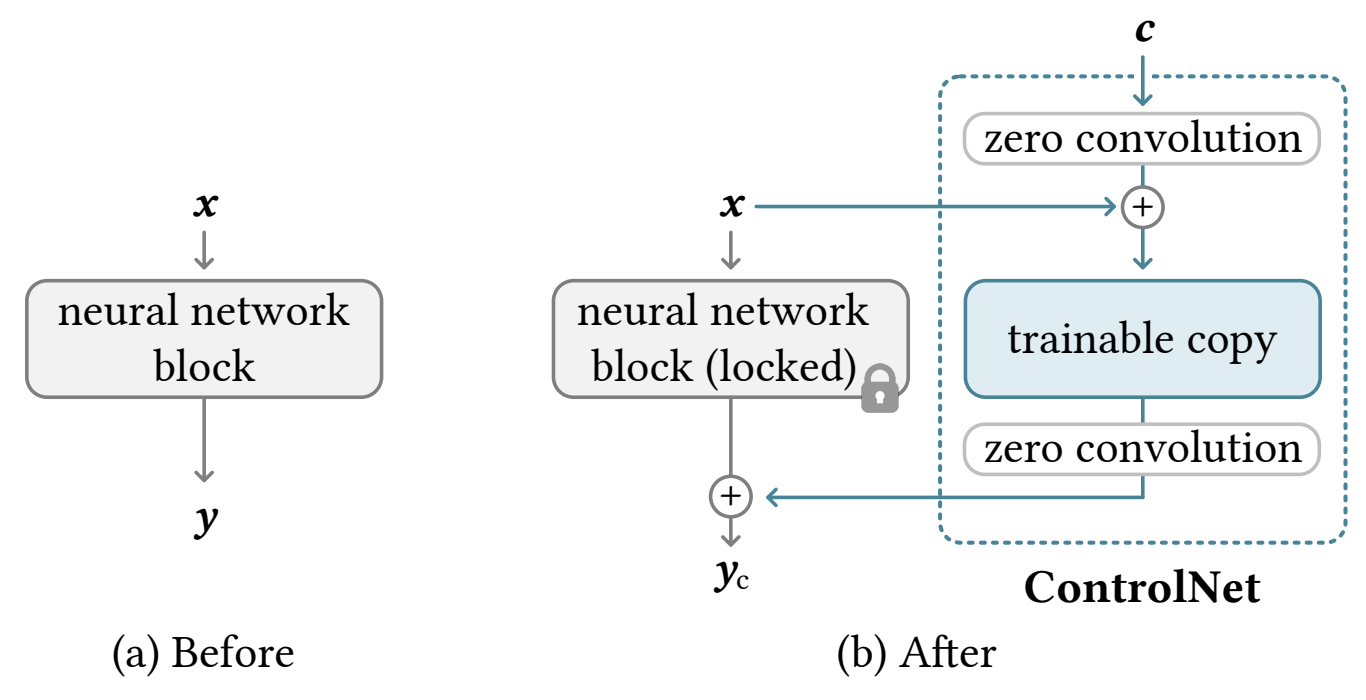

ControlNet Structure (1.0)

ControlNet is a neural network structure to control diffusion models by adding extra conditions.

source: https://arxiv.org/abs/2302.05543


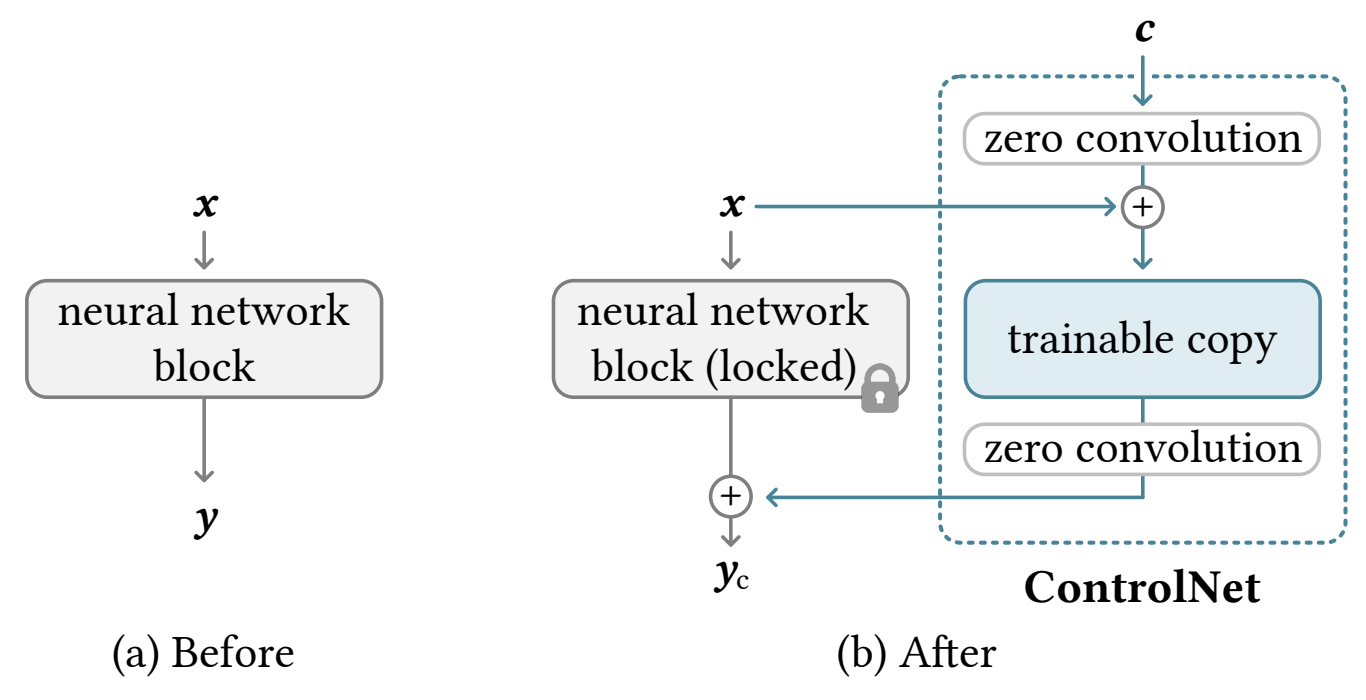

- It duplicates the weights of neural network blocks into two copies: one “locked” and one “trainable.”

- The “trainable” copy adapts to your specific condition, while the “locked” copy maintains the integrity of your original model.

- This approach ensures that training with a small dataset of image pairs won’t compromise the production-ready diffusion models.

- The “zero convolution” is a 1×1 convolution with weights and biases initialized to zero.

- Prior to training, all zero convolutions produce zeros, preventing any initial distortion from ControlNet.

- No layers are trained from scratch; instead, you are fine-tuning, keeping your original model intact.

- This method allows for training on small-scale or even personal devices.

Immediately after initialization, before any training, the ControlNet block satisfies the following:

$$
\left\{
\begin{array}{l}
\mathcal{Z}\left(\boldsymbol{c} ; \Theta_{\mathrm{z}1}\right) = 0 \\
\\
\mathcal{F}\left(x + \mathcal{Z}\left(\boldsymbol{c} ; \Theta_{\mathrm{z}1}\right); \Theta_{\mathrm{c}}\right) = \mathcal{F}\left(x ; \Theta_{\mathrm{c}}\right) = \mathcal{F}(x ; \Theta) \\
\\
\mathcal{Z}\left(\mathcal{F}\left(x + \mathcal{Z}\left(\boldsymbol{c} ; \Theta_{\mathrm{z}1}\right); \Theta_{\mathrm{c}}\right); \Theta_{\mathrm{z}2}\right) = \mathcal{Z}\left(\mathcal{F}\left(x ; \Theta_{\mathrm{c}}\right); \Theta_{\mathrm{z}2}\right) = 0
\end{array}
\right.
$$

- That is to say, when ControlNet is untrained, the output is 0, so the numbers added to the original network are also 0.

- This has no impact on the original network, ensuring that the performance of the original network is fully preserved.

- Subsequent training updates only the trainable copy and the zero convolutions while the original network stays locked, so it can be considered equivalent to fine-tuning the network.
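The zero-initialization property can be checked numerically. The following is a minimal NumPy sketch under stated assumptions: `block` is a toy stand-in for a real network block $\mathcal{F}$, the tensor sizes are arbitrary, and the way the locked and trainable branches are summed follows the formulation in the ControlNet paper. All function and variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv1x1(x, weight, bias):
    """1x1 convolution on a (C, H, W) array: a per-pixel linear map over channels."""
    return np.einsum("oc,chw->ohw", weight, x) + bias[:, None, None]

def block(x, theta):
    """Toy stand-in for a network block F(x; theta): 1x1 conv followed by ReLU."""
    weight, bias = theta
    return np.maximum(conv1x1(x, weight, bias), 0.0)

channels, height, width = 4, 8, 8
x = rng.normal(size=(channels, height, width))  # input feature map
c = rng.normal(size=(channels, height, width))  # condition feature map

# Locked parameters Theta and a trainable copy Theta_c with identical initial values.
theta = (rng.normal(size=(channels, channels)), rng.normal(size=channels))
theta_c = (theta[0].copy(), theta[1].copy())

# Zero convolutions: weights and biases start at exactly zero.
theta_z1 = (np.zeros((channels, channels)), np.zeros(channels))
theta_z2 = (np.zeros((channels, channels)), np.zeros(channels))

def controlnet_block(x, c):
    """F(x; Theta) + Z(F(x + Z(c; Theta_z1); Theta_c); Theta_z2)."""
    locked_out = block(x, theta)
    trainable_out = block(x + conv1x1(c, *theta_z1), theta_c)
    return locked_out + conv1x1(trainable_out, *theta_z2)

y_original = block(x, theta)
y_controlled = controlnet_block(x, c)
print(np.array_equal(y_original, y_controlled))  # prints True
```

Because both zero convolutions output exactly zero, the controlled block reproduces the locked block's output bit for bit, regardless of the condition `c`.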

ControlNet in StableDiffusion

source: https://arxiv.org/pdf/2302.05543


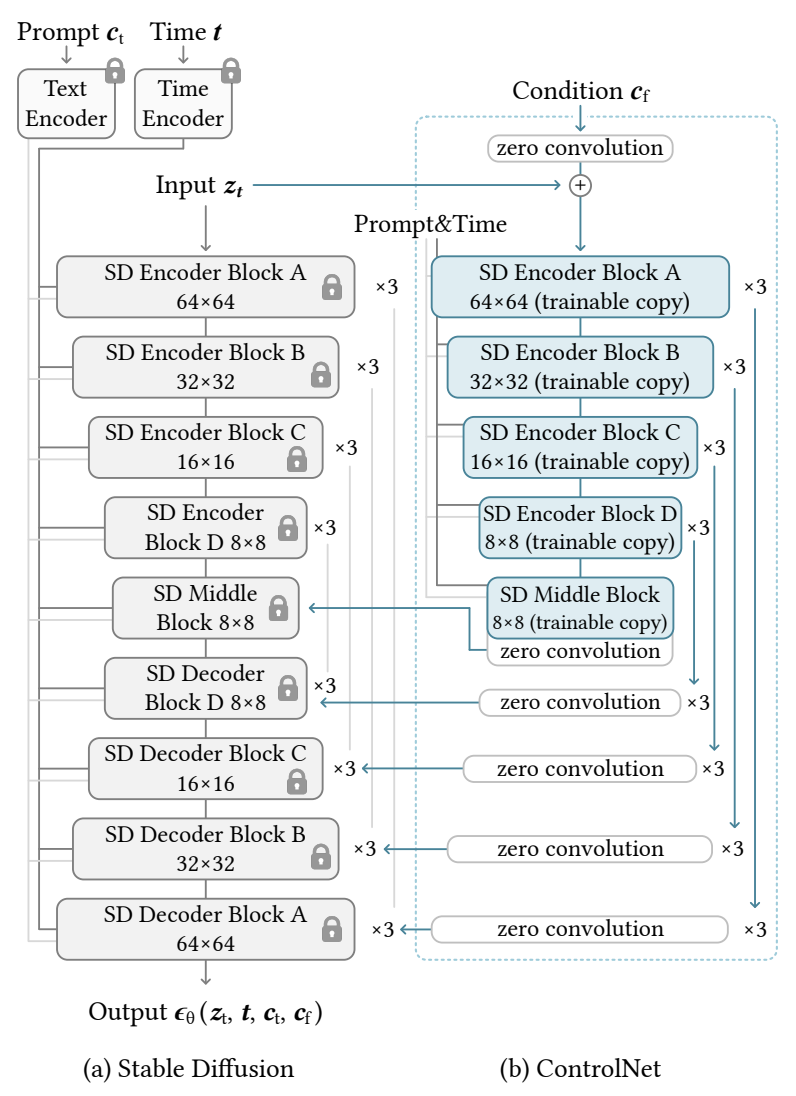

The previous section described how ControlNet controls an individual neural network block. In the paper, the authors use Stable Diffusion as an example to explain how ControlNet can control a large network. As the figure above shows, controlling Stable Diffusion involves copying and training the encoder, then feeding the copy's outputs into the decoder through its skip connections.

Before proceeding, it is important to note:

Stable Diffusion has a preprocessing step in which a 512×512 image is converted to a 64×64 latent image before training, for computational efficiency. To ensure that the control conditions are also mapped to the 64×64 conditional space, a small network $\mathcal{E}$ is added during training to convert image-space conditions into feature-map conditions.

$$c_f = \mathcal{E}(c_i)$$

This network $\mathcal{E}$ is a four-layer convolutional neural network with 4×4 kernels, a stride of 2, and channels 16, 32, 64, 128, initialized with Gaussian weights. This network is jointly trained with the entire ControlNet.
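To see what these layer settings do to the spatial resolution, the standard convolution output-size formula can be traced through the four layers. This is a rough sketch: the padding of 1 is an assumption (the description above does not state it), and the helper names are hypothetical.

```python
def conv_output_size(size, kernel, stride, padding):
    """Spatial size after one convolution (standard floor formula)."""
    return (size + 2 * padding - kernel) // stride + 1

def trace_shapes(input_size, channels=(16, 32, 64, 128), kernel=4, stride=2, padding=1):
    """Return (channels, height/width) after each layer of the condition encoder."""
    shapes = []
    size = input_size
    for ch in channels:
        size = conv_output_size(size, kernel, stride, padding)
        shapes.append((ch, size))
    return shapes

shapes = trace_shapes(512)
for ch, size in shapes:
    print(f"{ch} channels, {size}x{size}")
```

Under the assumed padding, each layer halves the resolution, so a 512×512 condition reaches 64×64 after the third layer and 32×32 after the fourth. The exact layout that lands on 64×64 therefore depends on padding and stride details that the description does not pin down.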

Workflow Wrapup

- Input Processing: ControlNet takes an additional input, such as a sketch or a pose, along with the standard textual input.

- Feature Extraction: It extracts features from the control signal using a separate encoder.

- Conditional Guidance: These features are then combined with the features extracted from the text prompt.

- Diffusion Process: The combined features guide the diffusion process, ensuring that the generated image aligns with both the text and the control input.

This mechanism allows for precise control over the content and structure of the generated images, making ControlNet a powerful tool for applications ranging from art creation to realistic image synthesis.
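The workflow above can be sketched end to end in a toy setting. This is an illustrative NumPy sketch, not the Stable Diffusion architecture: the encoder and decoder are tiny stand-ins, the text prompt is omitted, and all names and sizes are hypothetical. It shows control features being added to the decoder's skip connections, and how they only take effect once the zero convolutions have moved away from zero.

```python
import numpy as np

rng = np.random.default_rng(0)
dim, depth = 6, 3  # hypothetical feature width and number of encoder blocks

def encoder(x, weights):
    """Run the blocks in sequence and keep every intermediate feature (the skips)."""
    skips = []
    for w in weights:
        x = np.tanh(w @ x)
        skips.append(x)
    return skips

def decoder(skips):
    """Toy decoder: consume skip features from deepest to shallowest."""
    h = np.zeros(dim)
    for s in reversed(skips):
        h = np.tanh(h + s)
    return h

locked = [rng.normal(size=(dim, dim)) for _ in range(depth)]
trainable = [w.copy() for w in locked]                   # trainable encoder copy
zero_in = np.zeros((dim, dim))                           # zero conv on the condition
zero_out = [np.zeros((dim, dim)) for _ in range(depth)]  # one zero conv per skip

def generate(x, cond):
    skips = encoder(x, locked)                          # locked encoder features
    control = encoder(x + zero_in @ cond, trainable)    # features from the copy
    guided = [s + z @ f for s, z, f in zip(skips, zero_out, control)]
    return decoder(guided)

x, cond = rng.normal(size=dim), rng.normal(size=dim)
baseline = decoder(encoder(x, locked))
untrained = generate(x, cond)

# Simulate training by giving the zero convolutions arbitrary non-zero values.
zero_in[:] = 0.1 * rng.normal(size=(dim, dim))
for z in zero_out:
    z[:] = 0.1 * rng.normal(size=(dim, dim))
trained = generate(x, cond)

print(np.array_equal(baseline, untrained), np.allclose(baseline, trained))
```

Before training the guided output matches the baseline exactly; once the zero convolutions are non-zero, the condition starts to steer the decoder.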

How to implement?

Install the required libraries:

```bash
pip install diffusers transformers
```

Load the Stable Diffusion and ControlNet models:

```python
from diffusers import ControlNetModel, StableDiffusionControlNetPipeline

# Load a ControlNet checkpoint (here, one trained on Canny edge maps)
controlnet_model = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-canny")

# Load Stable Diffusion and attach the ControlNet through the dedicated pipeline
stable_diffusion_model = StableDiffusionControlNetPipeline.from_pretrained(
    "CompVis/stable-diffusion-v1-4", controlnet=controlnet_model
)
```

Prepare the inputs:

```python
from PIL import Image

# Load the control image (e.g., an edge map or pose); the pipeline accepts a PIL image directly
control_image = Image.open("path_to_control_image.jpg").convert("RGB")

# Prepare the text prompt
prompt = "A futuristic cityscape with towering skyscrapers"
```

Generate the image:

```python
# Generate the image with ControlNet guidance; the pipeline returns a list of PIL images
generated_image = stable_diffusion_model(prompt=prompt, image=control_image).images[0]
generated_image.save("output_image.jpg")
# display(generated_image)
```

This code demonstrates the basic implementation of ControlNet with StableDiffusion, highlighting the simplicity and flexibility of integrating control signals into the image generation process.

Conclusion

ControlNet offers a significant advancement in the field of text-to-image generation, providing precise control over the generated content. Its ability to integrate various control signals into the diffusion process makes it an invaluable tool for artists, designers, and researchers. By understanding its working principles, implementing it in your projects, and even training your own ControlNet, you can harness the full potential of this innovative technology to create stunning and accurate visual content.

References

- Adding Conditional Control to Text-to-Image Diffusion Models

- ControlNet GitHub

- ControlNet: A Complete Guide

- ControlNet原理解析 | 读论文 (ControlNet Principles Explained | Paper Reading)

- ControlNet HuggingFace

- Train your ControlNet with diffusers 🧨