[NLP][ML] Transformer (1) - Structure

Overview

The Transformer is a deep learning architecture introduced in the paper “Attention Is All You Need” by Vaswani et al. in 2017. It revolutionized the field of natural language processing (NLP) and brought significant advancements in various sequence-to-sequence tasks. The Transformer architecture, thanks to its attention mechanisms, enables efficient processing of sequential data while capturing long-range dependencies.

The Transformer is a Seq2Seq (sequence-to-sequence) model. It uses an Encoder-Decoder structure.

Below is a simple diagram:

Source: https://ai.googleblog.com/2016/09/a-neural-network-for-machine.html

The lines between the Encoder and the Decoder represent “attention”.

The thicker a line, the more attention the Decoder (bottom) pays to that Chinese character (top) when generating an English word.
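Each line's thickness corresponds to an attention weight. The sketch below is minimal and makes a few assumptions. It uses scaled dot-product scores, which is the scoring function in the Transformer paper; the model in the figure may score differently. The query and key vectors are made-up toy values. Under those assumptions, it computes the weights that one Decoder position assigns to several Encoder positions:

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    # Scaled dot-product score between one query and each key, then softmax.
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)

# Hypothetical toy vectors: one Decoder query, three Encoder keys.
query = [1.0, 0.0]
keys = [[2.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
weights = attention_weights(query, keys)
print([round(w, 3) for w in weights])
```

The first key points in the same direction as the query, so it receives the largest weight. In the figure, that would be the thickest line.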

In the following sections, I will introduce the structure of the Transformer and the attention mechanism.

For a detailed explanation of the key components of the Transformer and of attention, see the next article.

Structure

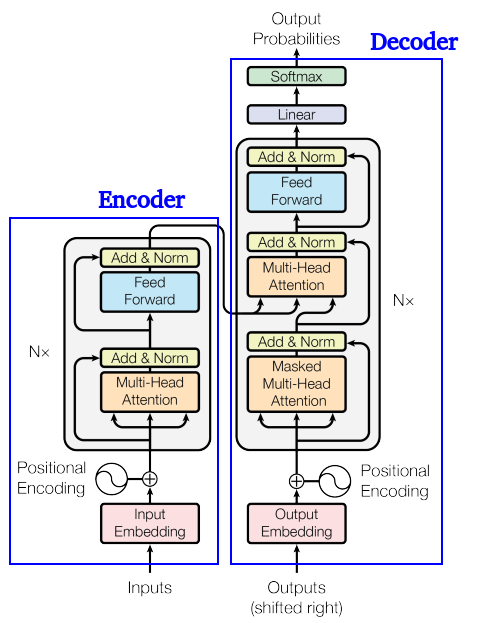

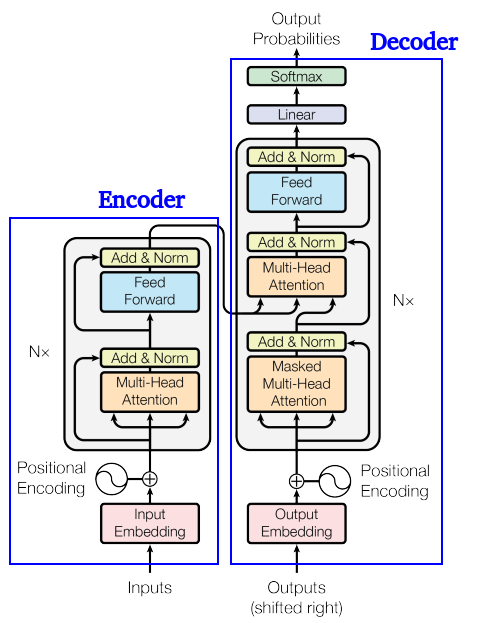

The figures above show the structure of the Transformer.

The part on the left in the figure is the Encoder, and the part on the right is the Decoder.

Notice that the left and right sides actually have quite similar structures.

The Encoder and the Decoder each consist of a stack of blocks with the same layer structure (6 blocks each in the original paper). Each block contains multi-head attention and a feed-forward network. The Decoder block also has a second attention layer that looks at the Encoder's output.

Encoder

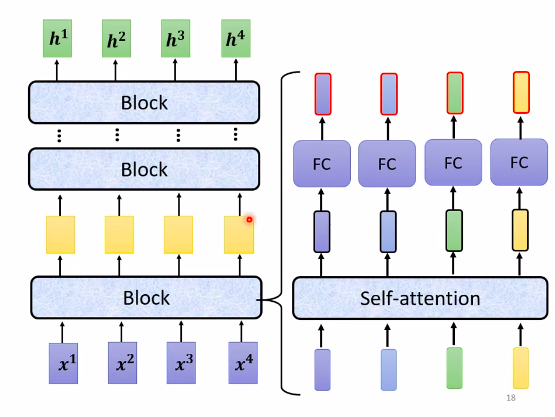

As mentioned above, the Encoder consists of many blocks.

We first convert the input sequence into a sequence of vectors. Each block then processes these vectors as follows:

- First, self-attention lets each vector take the entire input sequence into account and outputs a new sequence of vectors. (I will explain how self-attention does this later.)

- Next, this sequence of vectors is fed into a fully connected (FC) feed-forward network, which processes each vector separately.

- The output vectors of the FC network are the output of the block.
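The three steps above can be sketched as follows. This is a deliberately simplified sketch built on several assumptions. It uses a single attention head with no learned query/key/value projections, so each vector serves directly as its own query, key and value. The FC network is tiny and its weights are hand-picked and hypothetical. The real block uses learned weights, multiple heads, and the extra steps described next.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(xs):
    # Every vector attends to every vector in the sequence (including itself).
    d = len(xs[0])
    out = []
    for q in xs:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in xs]
        w = softmax(scores)
        # Weighted sum over all vectors: each output depends on the whole input.
        out.append([sum(w[j] * xs[j][i] for j in range(len(xs))) for i in range(d)])
    return out

def feed_forward(x, W1, b1, W2, b2):
    # Two-layer FC network with ReLU, applied to one vector at a time.
    h = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    return [sum(w * hi for w, hi in zip(row, h)) + b for row, b in zip(W2, b2)]

def simple_block(xs, W1, b1, W2, b2):
    attended = self_attention(xs)                                # step 1
    return [feed_forward(v, W1, b1, W2, b2) for v in attended]   # steps 2 and 3

# Hypothetical toy weights: d_model = 2, hidden size = 3.
W1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]]
b2 = [0.0, 0.0]
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
ys = simple_block(xs, W1, b1, W2, b2)
print(ys)
```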

However, the block in the original Transformer does a bit more than this. The details are as follows:

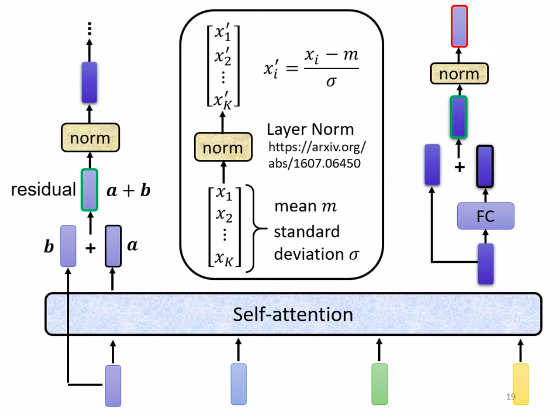

Following the steps above, call the output of self-attention $a$. We also take the original input of the block (call it $b$) and add it to $a$, giving $a + b$. This kind of architecture is called a residual connection.

Next, we apply layer normalization to $a + b$. It computes the mean $m$ and the standard deviation $\sigma$ of the components of the vector. Each component $x_i$ is then normalized as $x_i' = \frac{x_i - m}{\sigma}$, which subtracts the mean and divides by the standard deviation. This normalized result is the actual input of the FC network.
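Here is a minimal sketch of the residual connection followed by layer normalization on a single vector. The formula above leaves out two details that standard layer normalization in common frameworks includes. The first is a small constant `eps` that prevents division by zero. The second is a pair of learnable scale and shift parameters, named `gamma` and `beta` here. These names are illustrative. `gamma` and `beta` default to 1 and 0, so the result matches the plain formula.

```python
import math

def add(a, b):
    # Residual connection: element-wise sum of the sub-layer output and its input.
    return [x + y for x, y in zip(a, b)]

def layer_norm(x, gamma=None, beta=None, eps=1e-5):
    n = len(x)
    m = sum(x) / n                                                # mean
    sigma = math.sqrt(sum((xi - m) ** 2 for xi in x) / n + eps)  # std dev (eps for stability)
    if gamma is None:
        gamma = [1.0] * n   # learnable scale (assumed default: 1)
    if beta is None:
        beta = [0.0] * n    # learnable shift (assumed default: 0)
    return [g * (xi - m) / sigma + bt for xi, g, bt in zip(x, gamma, beta)]

a = [1.0, 2.0, 3.0, 4.0]      # hypothetical self-attention output
b = [0.5, 0.5, -0.5, -0.5]    # hypothetical original input of the block
out = layer_norm(add(a, b))
print([round(v, 4) for v in out])
```

After normalization, the vector has mean 0 and (up to `eps`) variance 1, whatever the scale of $a + b$ was.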

The FC network also has a residual connection: we add the input of the FC network to its output and then apply layer normalization again. The result is the actual output of one block in the Transformer Encoder.
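Both residual-plus-normalization steps follow the same pattern, so one way to sketch it is a generic "Add & Norm" wrapper around any sub-layer. Below, the wrapper is applied to the FC network for a single position. The FC weights and the input vector are hypothetical toy values. The helper names `add_and_norm` and `make_feed_forward` are illustrative and do not come from any library.

```python
import math

def layer_norm(x, eps=1e-5):
    m = sum(x) / len(x)
    sigma = math.sqrt(sum((v - m) ** 2 for v in x) / len(x) + eps)
    return [(v - m) / sigma for v in x]

def add_and_norm(x, sublayer):
    # Residual connection around any sub-layer, then layer normalization.
    y = sublayer(x)
    return layer_norm([xi + yi for xi, yi in zip(x, y)])

def make_feed_forward(W1, b1, W2, b2):
    # Two-layer FC network with ReLU, applied to a single vector.
    def ffn(x):
        h = [max(0.0, sum(w * v for w, v in zip(row, x)) + b) for row, b in zip(W1, b1)]
        return [sum(w * v for w, v in zip(row, h)) + b for row, b in zip(W2, b2)]
    return ffn

# Hypothetical toy weights: d_model = 3, hidden size = 4.
ffn = make_feed_forward(
    W1=[[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, -1.0, 0.5]],
    b1=[0.0, 0.1, 0.0, 0.0],
    W2=[[0.5, 0.0, 0.0, 1.0], [0.0, 0.5, 0.0, -1.0], [0.0, 0.0, 0.5, 0.0]],
    b2=[0.0, 0.0, 0.0],
)

# Hypothetical output of the first Add & Norm for one position.
h = [1.2, -0.3, -0.9]
block_output = add_and_norm(h, ffn)
print([round(v, 4) for v in block_output])
```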

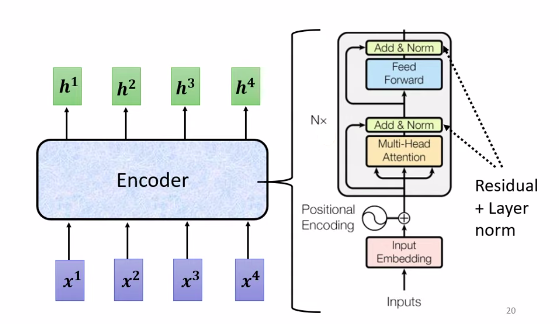

Now, let’s look back at the structure diagram of the Encoder:

First, at the input, each token is converted into a vector by Embedding, and then positional encoding is added. This is needed because self-attention by itself has no information about the order of positions in the sequence.
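The original paper uses fixed sinusoidal positional encodings, $PE_{(pos, 2i)} = \sin(pos / 10000^{2i/d_{model}})$ and $PE_{(pos, 2i+1)} = \cos(pos / 10000^{2i/d_{model}})$. Learned positional embeddings are a common alternative. Below is a minimal sketch of the sinusoidal version, added to hypothetical embedding vectors:

```python
import math

def positional_encoding(seq_len, d_model):
    # PE[pos][2i] = sin(pos / 10000^(2i/d_model)), PE[pos][2i+1] = cos(same angle).
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def add_positions(embeddings):
    # Add the position vector to each token embedding, element by element.
    pe = positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, pe)]

# Hypothetical embeddings: the same token appearing at positions 0 and 1.
emb = [[0.1, 0.2, 0.3, 0.4], [0.1, 0.2, 0.3, 0.4]]
x = add_positions(emb)
print(x[0] != x[1])  # → True
```

The same token now produces different vectors at different positions. That difference is the order information that self-attention alone cannot see.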

Next comes Multi-Head Attention, which is the self-attention part of the block, followed by Add & Norm, which means a residual connection plus layer normalization.

Finally, the output of the FC feed-forward network goes through Add & Norm once more, which gives the output of the whole block. This block is repeated N times (N = 6 in the original paper).
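The whole Encoder can be put together in a compact sketch: Embedding plus positional encoding, followed by N identical blocks. The sketch makes several simplifying assumptions. It uses a single attention head instead of multi-head attention. Biases are omitted. All weights come from a seeded random generator rather than training. The sizes are toy values. The helper names (`make_layer`, `encoder_block`, and so on) are hypothetical. Each of the N blocks gets its own parameters, as in the paper.

```python
import math
import random

def softmax(s):
    m = max(s)
    e = [math.exp(v - m) for v in s]
    t = sum(e)
    return [v / t for v in e]

def matvec(W, x):
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def layer_norm(x, eps=1e-5):
    m = sum(x) / len(x)
    sd = math.sqrt(sum((v - m) ** 2 for v in x) / len(x) + eps)
    return [(v - m) / sd for v in x]

def positional_encoding(pos, d):
    # Sinusoidal encoding: sin on even dimensions, cos on odd dimensions.
    return [math.sin(pos / 10000 ** ((i - i % 2) / d)) if i % 2 == 0
            else math.cos(pos / 10000 ** ((i - i % 2) / d)) for i in range(d)]

def random_matrix(rng, rows, cols):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]

def make_layer(rng, d, hidden):
    # Each block has its own (hypothetical, randomly initialized) parameters.
    return {"Wq": random_matrix(rng, d, d), "Wk": random_matrix(rng, d, d),
            "Wv": random_matrix(rng, d, d),
            "W1": random_matrix(rng, hidden, d), "W2": random_matrix(rng, d, hidden)}

def self_attention(xs, p):
    # Single-head scaled dot-product self-attention with learned projections.
    qs = [matvec(p["Wq"], x) for x in xs]
    ks = [matvec(p["Wk"], x) for x in xs]
    vs = [matvec(p["Wv"], x) for x in xs]
    d = len(xs[0])
    out = []
    for q in qs:
        w = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in ks])
        out.append([sum(w[j] * vs[j][i] for j in range(len(vs))) for i in range(d)])
    return out

def encoder_block(xs, p):
    # Attention -> Add & Norm -> Feed Forward -> Add & Norm.
    a = self_attention(xs, p)
    h = [layer_norm([u + v for u, v in zip(ai, xi)]) for ai, xi in zip(a, xs)]
    f = [matvec(p["W2"], [max(0.0, v) for v in matvec(p["W1"], hi)]) for hi in h]
    return [layer_norm([u + v for u, v in zip(fi, hi)]) for fi, hi in zip(f, h)]

def encoder(token_ids, embedding, layers):
    d = len(embedding[0])
    # Embedding lookup plus positional encoding.
    xs = [[e + p for e, p in zip(embedding[t], positional_encoding(pos, d))]
          for pos, t in enumerate(token_ids)]
    for p in layers:  # the same block structure, repeated N times
        xs = encoder_block(xs, p)
    return xs

rng = random.Random(0)
vocab_size, d_model, hidden, N = 10, 4, 8, 2  # toy sizes (the paper uses d_model=512, N=6)
embedding = random_matrix(rng, vocab_size, d_model)
layers = [make_layer(rng, d_model, hidden) for _ in range(N)]
out = encoder([3, 1, 4, 1], embedding, layers)
print(len(out), len(out[0]))  # → 4 4
```

The Encoder outputs one vector per input token. Every one of these vectors has passed through the same block structure N times.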

Decoder

Then let us look at the Decoder:

The Decoder’s input is the output sequence it has generated so far (shifted right by one position). This input goes through the same Embedding and Positional Encoding, and then enters a block that is repeated N times.
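A minimal sketch can show the idea of feeding previously generated tokens back in as the Decoder's input. The function `toy_decoder_step` is a hypothetical stand-in for the whole Decoder (Embedding, Positional Encoding, N blocks, Linear, Softmax). It ignores the Encoder's output and simply follows a fixed rule. Token ids, `bos`, and `eos` are toy assumptions.

```python
def autoregressive_decode(decoder_step, bos, eos, max_len):
    # At each step, the Decoder's input is everything it has generated so far.
    generated = [bos]
    while len(generated) < max_len:
        probs = decoder_step(generated)              # distribution over the next token
        next_token = max(range(len(probs)), key=lambda i: probs[i])
        generated.append(next_token)                 # feed the output back in as input
        if next_token == eos:
            break
    return generated

# Hypothetical stand-in for the Decoder: it prefers token (last + 1),
# and emits eos once the last token is 3 or larger.
def toy_decoder_step(prefix, vocab_size=5, eos=4):
    last = prefix[-1]
    target = eos if last >= 3 else last + 1
    return [0.9 if t == target else 0.1 / (vocab_size - 1) for t in range(vocab_size)]

print(autoregressive_decode(toy_decoder_step, bos=0, eos=4, max_len=10))  # → [0, 1, 2, 3, 4]
```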

One difference is the extra “Masked” (note the red box) in the first Multi-Head Attention of the Decoder block. What does it mean?

Masked means that each position can only attend to the part that has already been generated, and cannot peek at words that come later. The Decoder generates its output one token at a time, so the future tokens do not exist yet when the current one is being produced, and the model must not rely on them. This may still sound a bit vague; I will make it clearer when I discuss self-attention in the next article.
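Here is a minimal sketch of the mask, assuming scaled dot-product attention scores have already been computed. The score values are hypothetical. Before the softmax, every score where a position would look at a later position is set to negative infinity. As a result, its weight becomes exactly zero.

```python
import math

def causal_mask(n):
    # mask[i][j] is True when position i may attend to position j (only j <= i).
    return [[j <= i for j in range(n)] for i in range(n)]

def masked_softmax(scores, allowed):
    # Future positions get -inf, so exp() turns their weight into 0.
    masked = [s if ok else -math.inf for s, ok in zip(scores, allowed)]
    m = max(masked)
    exps = [math.exp(s - m) for s in masked]
    total = sum(exps)
    return [e / total for e in exps]

scores = [[0.5, 1.0, 2.0],
          [0.3, 0.8, 1.5],
          [1.0, 0.2, 0.7]]   # hypothetical attention scores for 3 output positions
mask = causal_mask(3)
weights = [masked_softmax(row, ok) for row, ok in zip(scores, mask)]
for row in weights:
    print([round(w, 3) for w in row])
```

The first position can only attend to itself, so its weights are `[1.0, 0.0, 0.0]`. The last position can attend to all three.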

After the N blocks, a Linear layer followed by Softmax produces the output probability distribution over the vocabulary. We can then either sample from this distribution or take the token with the highest probability, producing the output sequence one token at a time.
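Here is a minimal sketch of this last step for one output position. The 4-word vocabulary, the Linear weights, and the final Decoder vector are all hypothetical toy values. The sketch contrasts the two ways of picking a token: taking the most probable one (greedy) versus sampling from the distribution.

```python
import math
import random

def linear(W, b, x):
    # Project the Decoder's output vector to one score (logit) per vocabulary token.
    return [sum(w * v for w, v in zip(row, x)) + bi for row, bi in zip(W, b)]

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def greedy(probs):
    # Take the token with the highest probability.
    return max(range(len(probs)), key=lambda i: probs[i])

def sample(probs, rng):
    # Draw one token at random according to the distribution.
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

vocab = ["<eos>", "I", "like", "cats"]                   # hypothetical vocabulary
W = [[0.1, -0.2], [0.4, 0.3], [1.0, 0.5], [0.2, 0.9]]    # hypothetical Linear weights
b = [0.0, 0.0, 0.0, 0.0]
h = [1.0, 0.5]                                           # hypothetical final Decoder vector
probs = softmax(linear(W, b, h))
print(vocab[greedy(probs)])                    # → like
print(vocab[sample(probs, random.Random(42))])
```

Greedy decoding always returns the same token for the same input. Sampling can return any token with non-zero probability, which gives more varied output.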

The most critical piece, the self-attention mechanism, has not been explained in detail yet. In the next article, I will introduce how it attends to the entire input sequence and processes it in parallel!

Reference

- 3Blue1Brown

- iThome - Day 27 Transformer (recommended)

- iThome - Day 28 Self-Attention (recommended)

- Hung-yi Lee (李宏毅), Transformer, Deep Learning course (recommended)

- Hung-yi Lee (李宏毅), Transformer lecture slides

- Hung-yi Lee (李宏毅), YouTube channel

- Vaswani et al., “Attention Is All You Need” (paper)